What Is a Robots.txt File?

A robots.txt file is a set of instructions that tell search engines which pages to crawl and which pages to avoid, guiding crawler access but not necessarily keeping pages out of Google’s index.

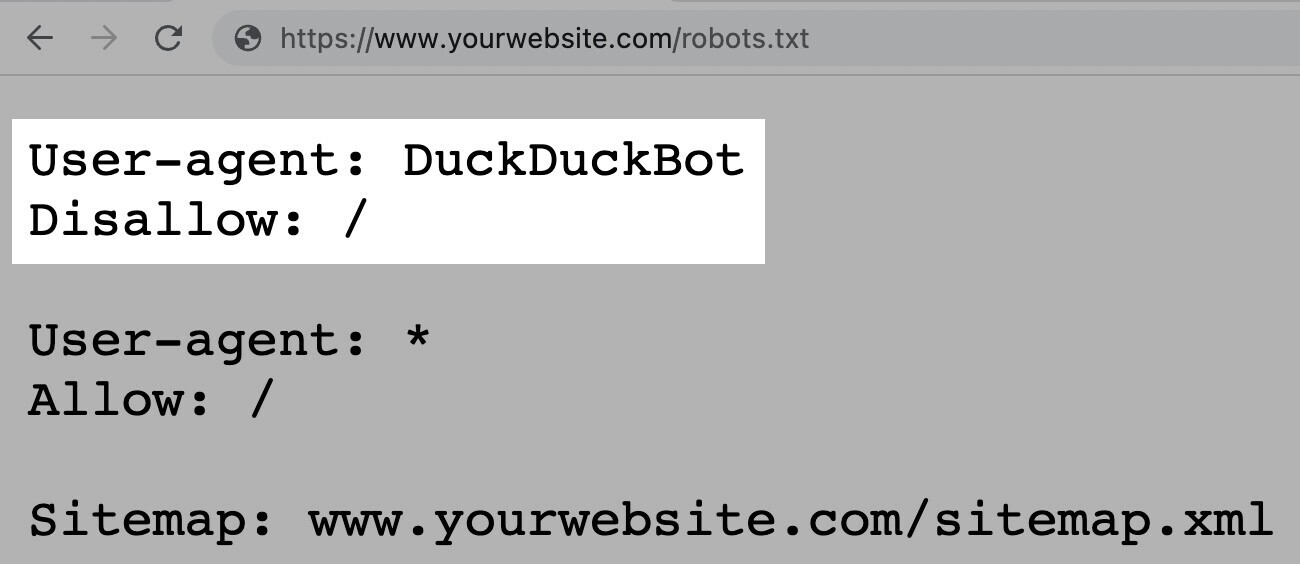

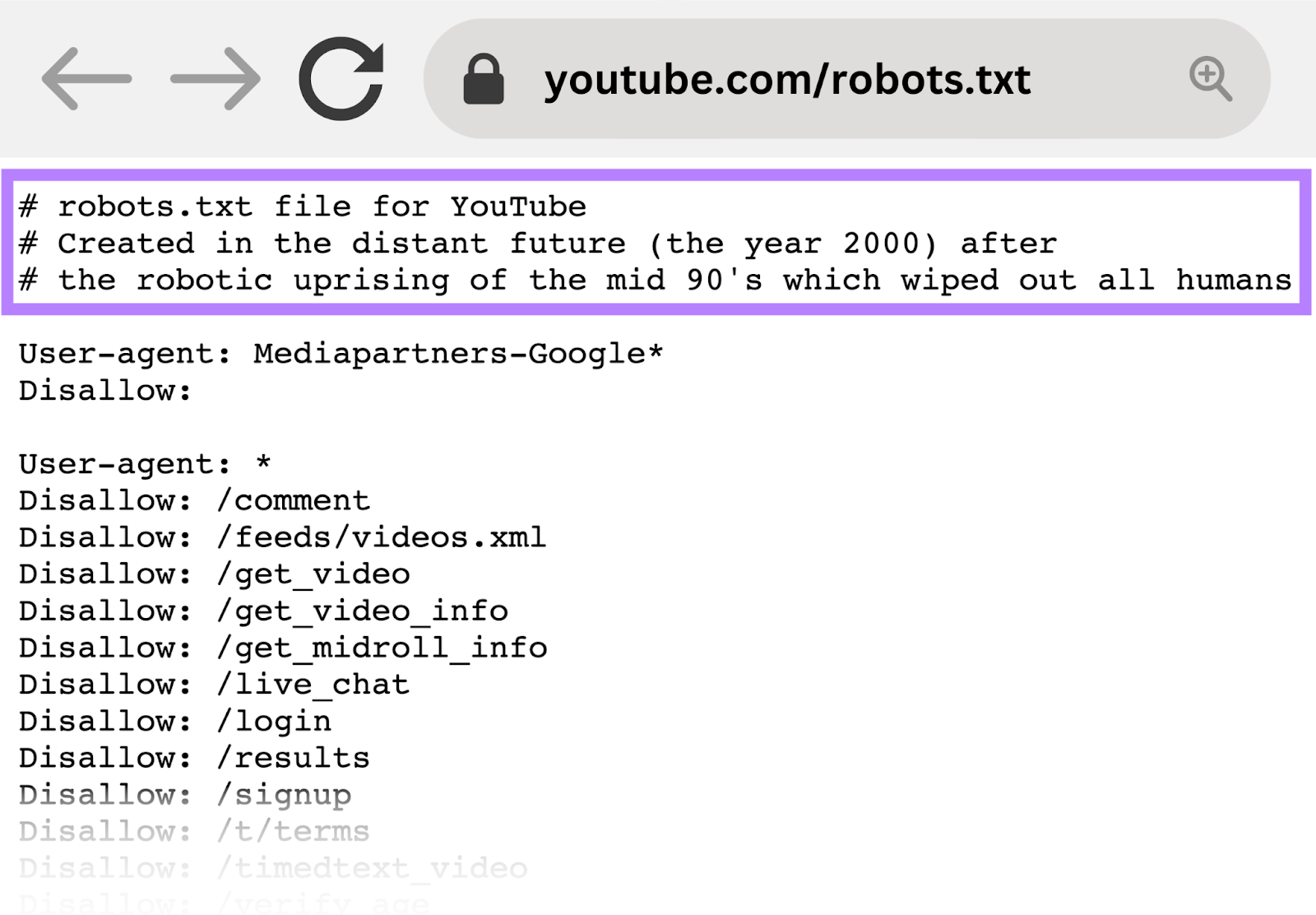

A robots.txt file looks like this:

Robots.txt files may seem complicated. However, the syntax (the rules for how the file is written) is straightforward.

Before explaining the details of robots.txt, we’ll clarify how robots.txt differs from other terms that sound similar.

Robots.txt vs. Meta Robots vs. X-Robots

Robots.txt files, meta robots tags, and X-Robots-Tags guide search engines in handling site content but differ in their level of control, where they're located, and what they control.

Consider these specifics:

- Robots.txt: This file is located in the website’s root directory and provides site-wide instructions to search engine crawlers on which areas of the site they should and shouldn’t crawl

- Meta robots tags: These tags are snippets of code in the <head> section of individual webpages and provide page-specific instructions to search engines on whether to index (include in search results) and follow (crawl the links on) each page

- X-Robots-Tags: These directives are implemented in a file’s HTTP response header and are used primarily for non-HTML files, such as PDFs and images

Further reading: Meta Robots Tag & X-Robots-Tag Explained

Why Is Robots.txt Important for SEO?

A robots.txt file is important for SEO because it helps manage web crawler activities to prevent them from overloading your website and crawling pages not intended for public access.

Below are a few reasons to use a robots.txt file:

1. Optimize Crawl Budget

Blocking unnecessary pages with robots.txt allows Google’s web crawler to spend more crawl budget (how many pages Google will crawl on your site within a certain time frame) on pages that matter.

Crawl budget can vary based on your site’s size, health, and number of backlinks.

If your site has more pages than its crawl budget, important pages may fail to get indexed.

Unindexed pages won’t rank, which means you’ve wasted time creating pages that users never see in search results.

2. Block Duplicate and Non-Public Pages

Not all pages are intended for inclusion in the search engine results pages (SERPs), and a robots.txt file lets you block those non-public pages from crawlers.

Consider staging sites, internal search results pages, duplicate pages, or login pages. Some content management systems handle these internal pages automatically.

WordPress, for example, disallows its admin directory “/wp-admin/” for all crawlers by default.

3. Hide Resources

Robots.txt lets you exclude resources like PDFs, videos, and images from crawling if you want to keep them private or have Google focus on more important content.

How Does a Robots.txt File Work?

A robots.txt file tells search engine bots which URLs to crawl and (more importantly) which URLs to avoid crawling.

When search engine bots crawl webpages, they discover and follow links. This process leads them from one site to another across various pages.

If a bot finds a robots.txt file, it reads that file before crawling any pages.

The syntax is straightforward. You assign rules by identifying the user-agent (the search engine bot) and specifying directives (the rules).

You can use an asterisk (*) to assign directives to all user-agents at once.

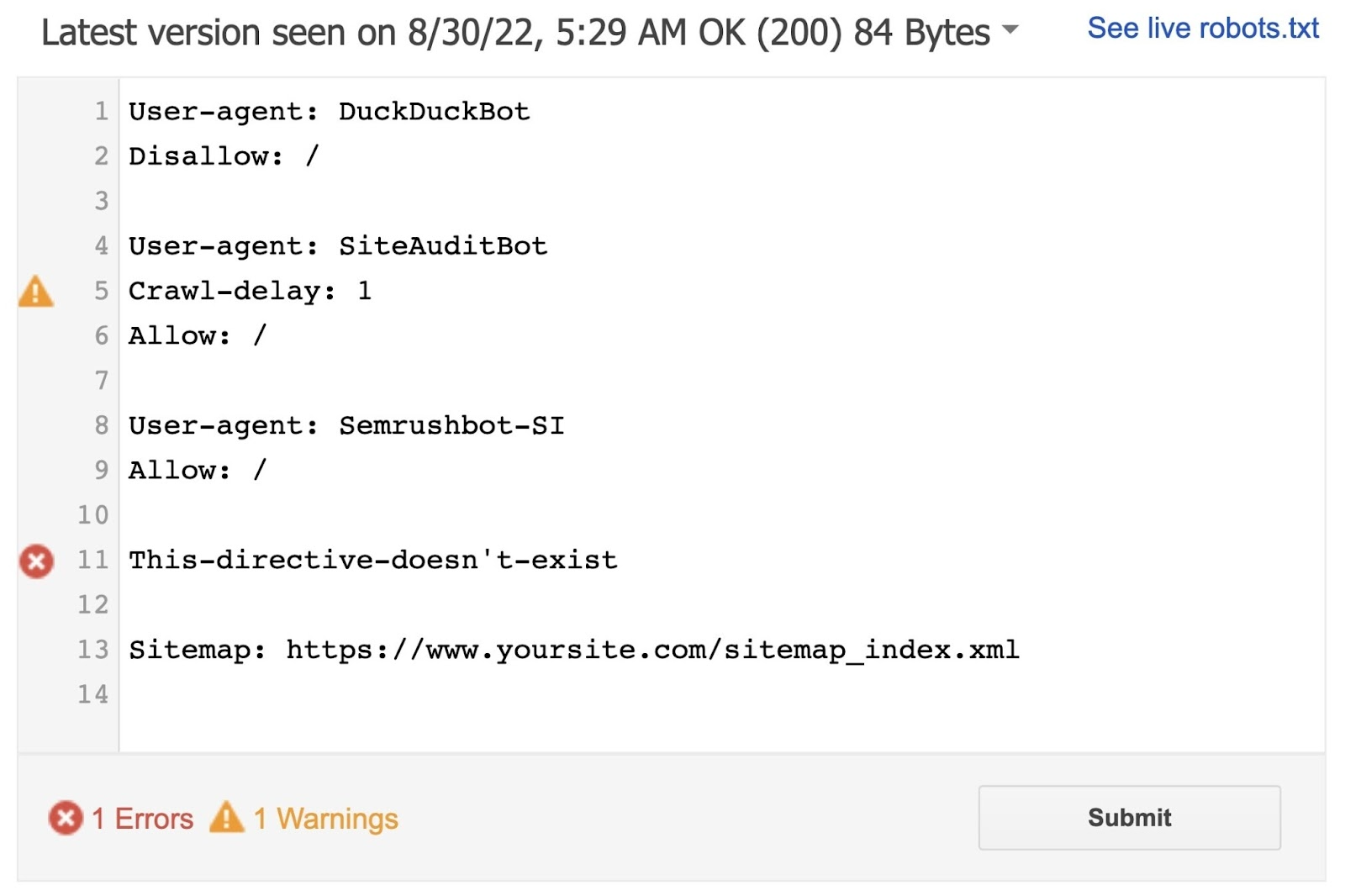

For example, the following instruction allows all bots except DuckDuckGo to crawl your site:

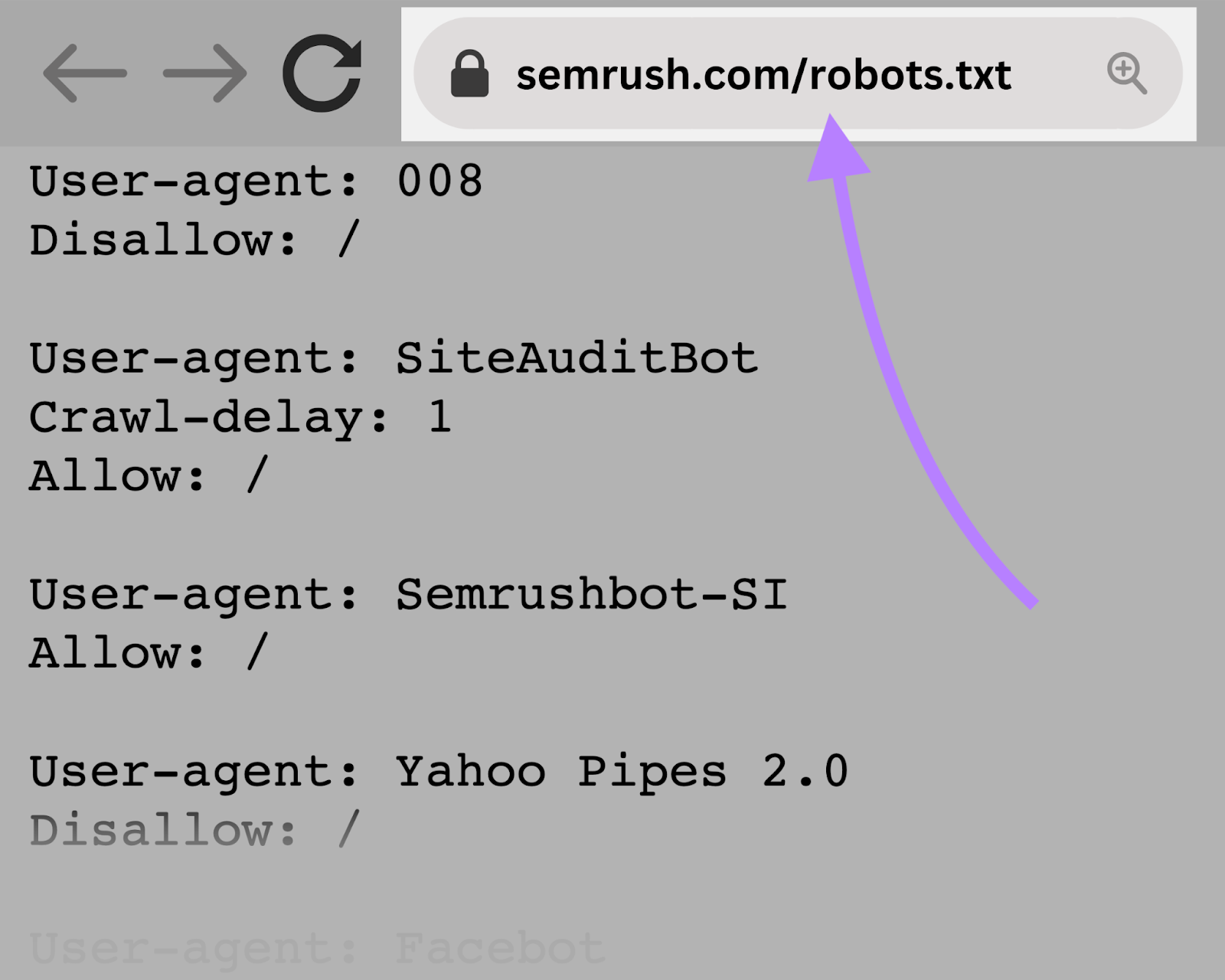
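To check how a crawler reads user-agent groups, you can parse a file with Python's standard-library `urllib.robotparser`. The file below is an assumed stand-in for the DuckDuckGo example in the image (the actual rules shown there may differ). It blocks DuckDuckBot from the whole site and allows every other bot. This parser uses simple first-match, prefix-based rules, so treat it as an approximation rather than a reproduction of any search engine's behavior.

```python
from urllib.robotparser import RobotFileParser

# Assumed example file: block DuckDuckBot entirely, allow everyone else.
# Lines within a group must not be separated by blank lines, because
# urllib.robotparser treats a blank line as the end of a group.
ROBOTS_TXT = """\
User-agent: DuckDuckBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for bot in ("DuckDuckBot", "Googlebot", "Bingbot"):
    allowed = parser.can_fetch(bot, "https://www.example.com/blog/")
    print(f"{bot}: {'allowed' if allowed else 'blocked'}")
# DuckDuckBot: blocked
# Googlebot: allowed
# Bingbot: allowed
```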

Semrush bots crawl the web to gather insights for our website optimization tools, such as Site Audit, Backlink Audit, and On Page SEO Checker.

Semrush bots respect the rules in your robots.txt file, meaning they won’t crawl your site if you block Semrush bots from crawling.

However, blocking Semrush bots limits the use of certain Semrush tools.

For example, if you block the SiteAuditBot from crawling your site, you can’t audit your site with the Site Audit tool. This tool helps analyze and fix technical issues on your site.

If you block the SemrushBot-SI from crawling your site, you can’t use the On Page SEO Checker tool effectively.

As a result, you lose the opportunity to generate optimization ideas that could improve your webpages’ rankings.

How to Find a Robots.txt File

Your robots.txt file is hosted on your server, just like other files on your website.

You can view any website’s robots.txt file by typing the site’s homepage URL into your browser and adding “/robots.txt” at the end.

For example: “https://semrush.com/robots.txt.”
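The “homepage plus /robots.txt” rule can be expressed as a small function. This is a sketch using Python's `urllib.parse`, and the function name `robots_txt_url` is hypothetical. It keeps the scheme and host (including any subdomain and port) and drops the path, query, and fragment, because each host has its own robots.txt file at its root.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs crawling of page_url."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    # Keep scheme and host (with any subdomain/port); replace the rest.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post/?ref=nav"))
# https://www.example.com/robots.txt
print(robots_txt_url("https://blog.example.com/posts/1"))
# https://blog.example.com/robots.txt
```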

Examples of Robots.txt Files

Here are some real-world robots.txt examples from popular websites.

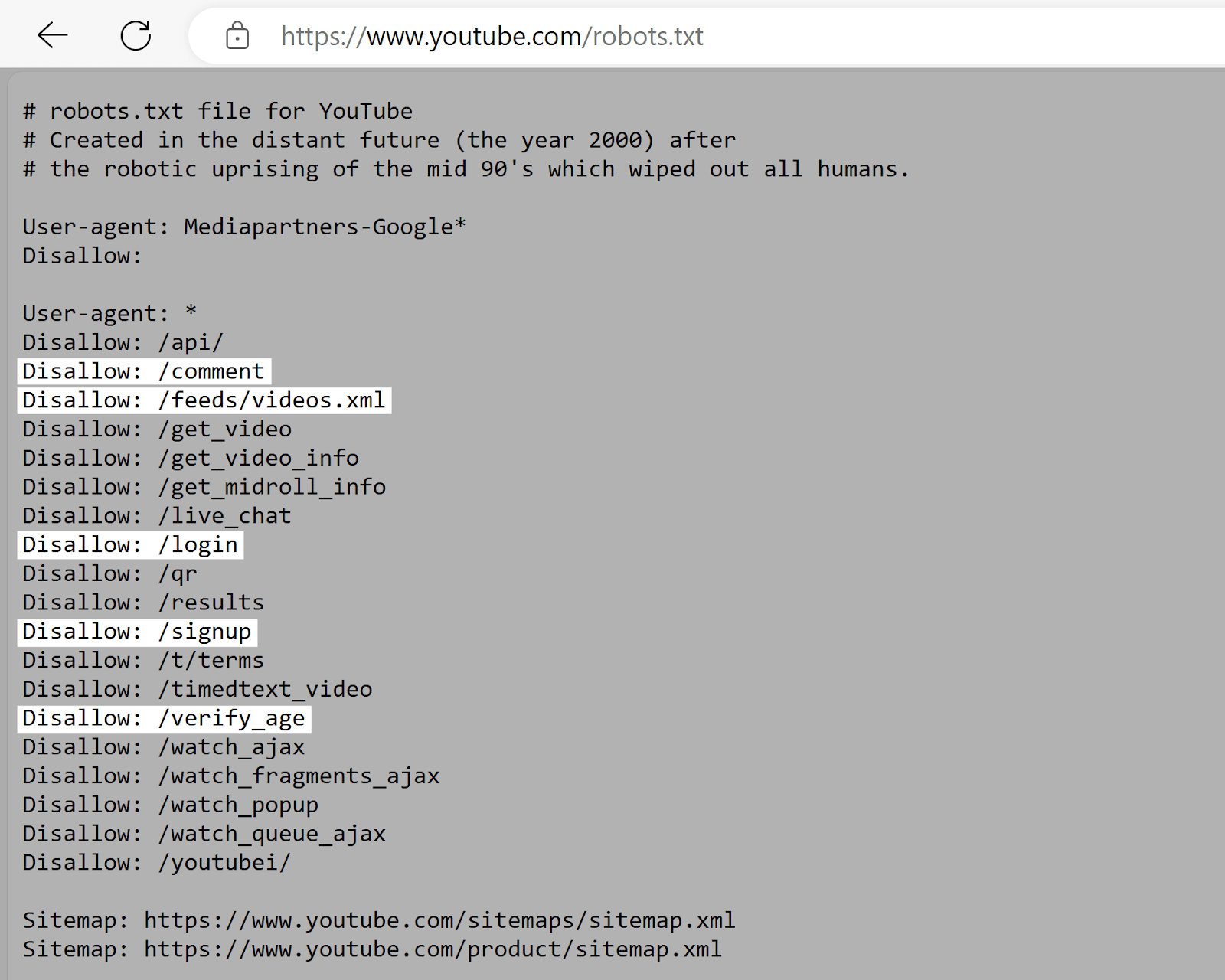

YouTube

YouTube’s robots.txt file tells crawlers not to access user comments, video feeds, login/signup pages, and age verification pages.

The rules in YouTube’s robots.txt file discourage crawling of user-specific or dynamic content that doesn’t help search results and may raise privacy concerns.

G2

G2’s robots.txt file tells crawlers not to access sections with user-generated content, like survey responses, comments, and contributor profiles.

The rules in G2’s robots.txt file help protect user privacy by restricting access to potentially sensitive personal information. The rules also prevent attempts to manipulate search results.

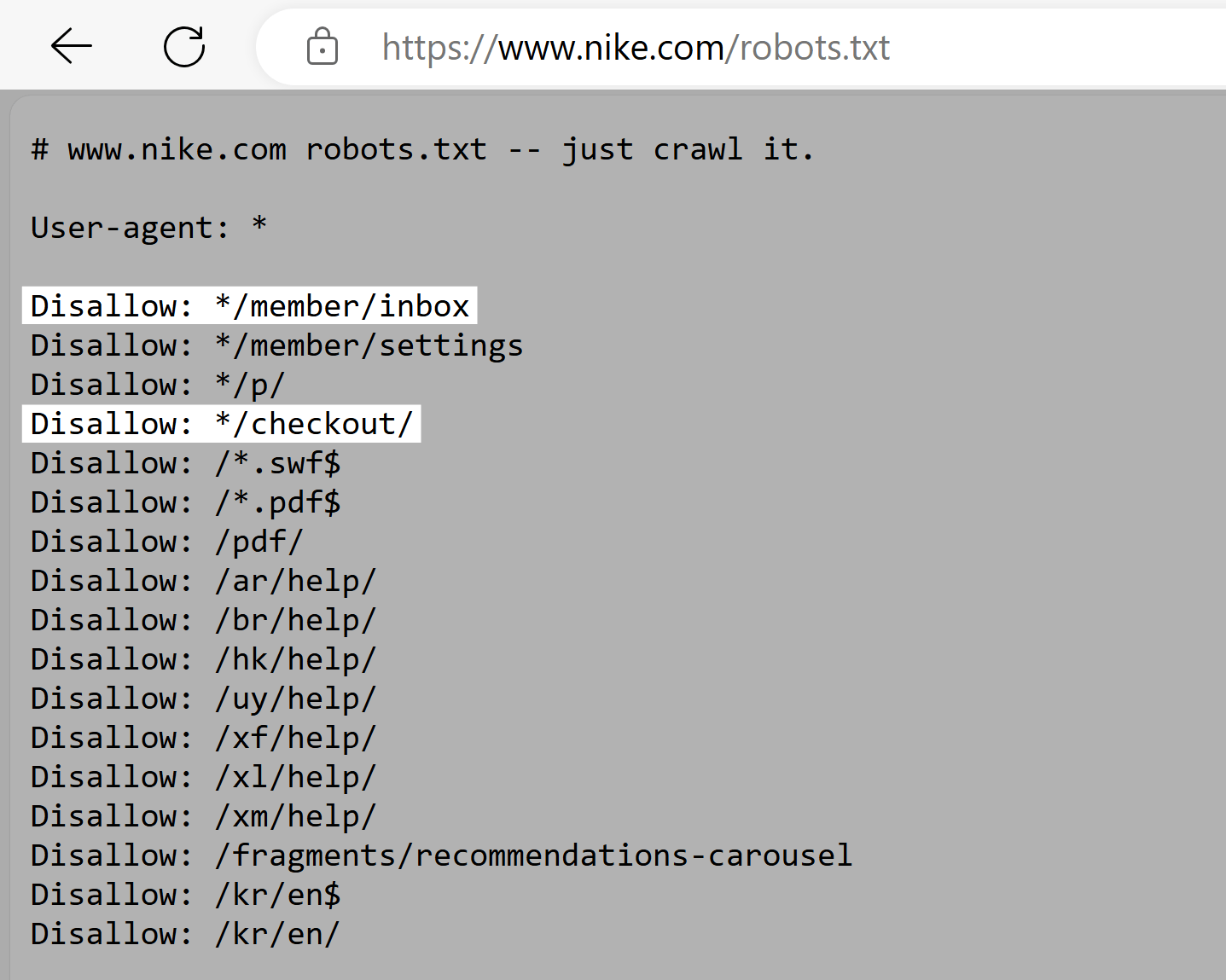

Nike

Nike’s robots.txt file uses the disallow directive to block crawlers from accessing user-specific directories, like “/checkout/” and “*/member/inbox.”

The rules in Nike’s robots.txt file prevent sensitive user data from appearing in search results and reduce opportunities to manipulate SEO rankings.

Search Engine Land

Search Engine Land’s robots.txt file uses the disallow directive to discourage crawling of “/tag/” directory pages, which often have low SEO value and can cause duplicate content issues.

The rules in Search Engine Land’s robots.txt file encourage search engines to focus on higher-quality content and optimize the site’s crawl budget—something especially important for large websites like Search Engine Land.

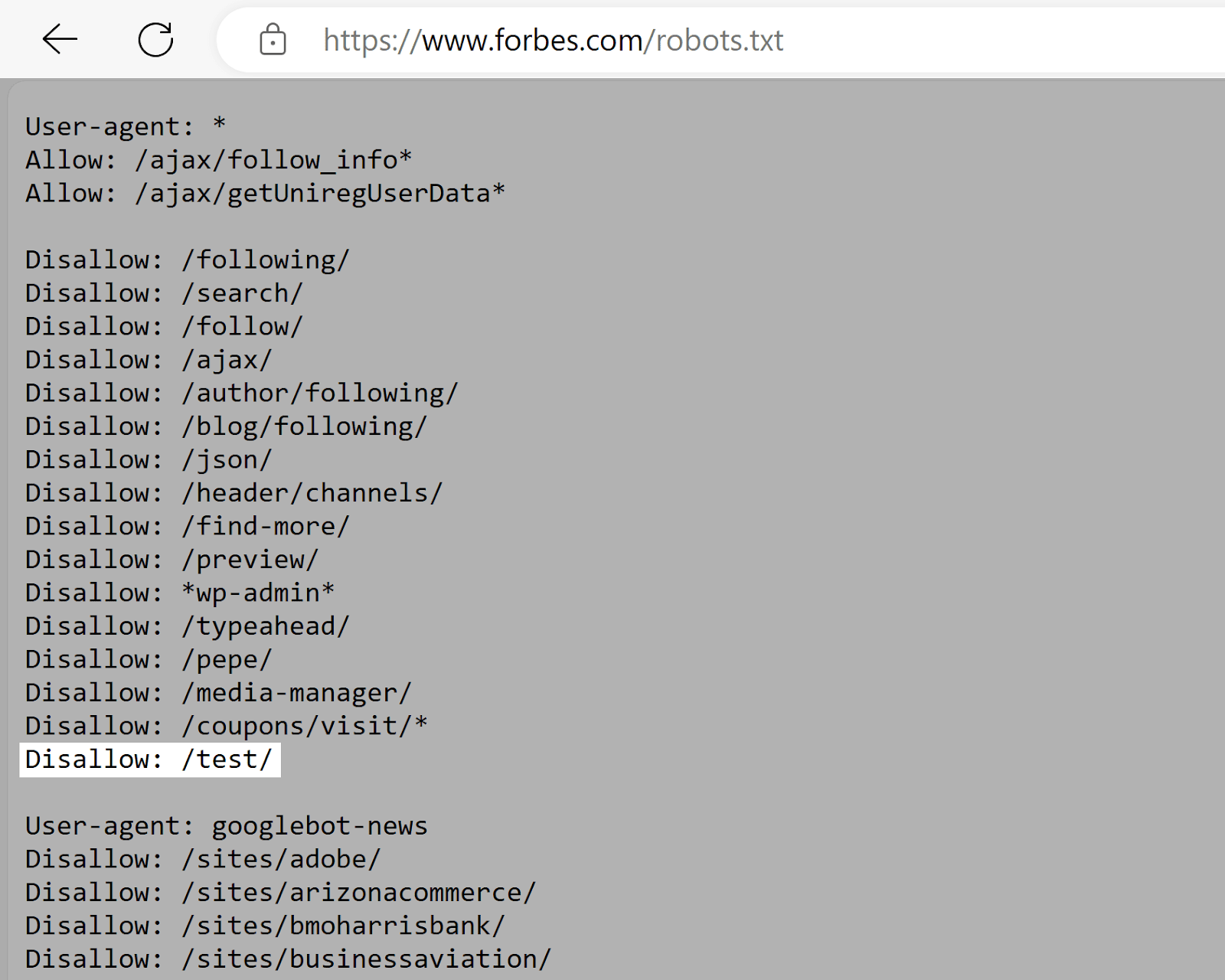

Forbes

Forbes’s robots.txt file instructs Google not to crawl the “/test/” directory, which likely contains testing or staging environments.

The rules in Forbes’s robots.txt file prevent unfinished or sensitive content from being indexed, assuming it’s not linked to from elsewhere.

Explaining Robots.txt Syntax

A robots.txt file consists of one or more directive blocks, with each block specifying a user-agent (a search engine bot) and providing “allow” or “disallow” instructions.

A simple file may look like this:

```
User-agent: Googlebot
Disallow: /not-for-google

User-agent: DuckDuckBot
Disallow: /not-for-duckduckgo

Sitemap: https://www.yourwebsite.com/sitemap.xml
```

The User-Agent Directive

The first line of each directive block specifies the user-agent, which identifies the crawler.

For example, use these lines to prevent Googlebot from crawling your WordPress admin page:

```
User-agent: Googlebot
Disallow: /wp-admin/
```

When multiple groups exist, a bot follows the one with the most specific user-agent that matches it.

Imagine you have three sets of directives: one for *, one for Googlebot, and one for Googlebot-Image.

If the Googlebot-News user agent crawls your site, it will follow the Googlebot directives.

However, the Googlebot-Image user agent will follow the more specific Googlebot-Image directives.
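The selection rule above can be modeled in a few lines. This is a simplified sketch, not Google's implementation. The `GROUPS` mapping and `select_group` helper are hypothetical, and each crawler is assumed to know its own user-agent tokens from most to least specific (for example, Googlebot-Image falls back to Googlebot).

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical parsed file: lowercase user-agent token -> (directive, path) rules.
GROUPS: Dict[str, List[Tuple[str, str]]] = {
    "*": [("disallow", "/private/")],
    "googlebot": [("disallow", "/not-for-google/")],
    "googlebot-image": [("disallow", "/photos/")],
}


def select_group(tokens: Sequence[str], groups: Dict[str, List[Tuple[str, str]]]) -> Optional[str]:
    """Return the key of the single group a crawler should obey.

    `tokens` lists the crawler's user-agent tokens from most to least specific
    (an assumption modeled on how Google describes its crawlers). Matching is
    case-insensitive; the catch-all "*" group applies only if no token matches.
    """
    for token in tokens:
        key = token.lower()
        if key in groups:
            return key
    return "*" if "*" in groups else None


print(select_group(["Googlebot-News", "Googlebot"], GROUPS))   # googlebot
print(select_group(["Googlebot-Image", "Googlebot"], GROUPS))  # googlebot-image
print(select_group(["Bingbot"], GROUPS))                       # *
```

Because a crawler follows only one group, Googlebot-Image in this sketch obeys only the “/photos/” rule and doesn't also inherit the Googlebot or “*” rules. Repeat any rule you want every crawler to follow in each group.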

The Disallow Robots.txt Directive

The disallow directive lists parts of the site a crawler shouldn’t access.

An empty disallow line (“Disallow:” with no path) means no restrictions exist.

You can also grant access explicitly with an allow rule. For example, the below rule allows all crawlers access to your entire site:

```
User-agent: *
Allow: /
```

To block all crawlers from your entire site, use the below block:

```
User-agent: *
Disallow: /
```

The Allow Directive

The allow directive allows search engines to crawl a subdirectory or specific page, even in an otherwise disallowed directory.

For example, use the below rule to prevent Googlebot from accessing all blog posts except one:

```
User-agent: Googlebot
Disallow: /blog
Allow: /blog/example-post
```

The Sitemap Directive

The sitemap directive tells search engines—specifically Bing, Yandex, and Google—where to find your XML sitemap (a file that lists all the pages you want search engines to index).

The sitemap directive is a single line with the full URL of your sitemap, for example “Sitemap: https://www.yourwebsite.com/sitemap.xml”.

Including a sitemap directive in your robots.txt file is a quick way to share your sitemap.

However, you should also submit your XML sitemap directly to search engines via their webmaster tools to speed up crawling.
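Sitemap lines aren't tied to a user-agent group, so crawlers read them wherever they appear, and a file can list more than one. Python's `urllib.robotparser` (3.8+) exposes them through `site_maps()`. In this sketch, the second sitemap URL is a hypothetical example.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /archive/

Sitemap: https://www.yourwebsite.com/sitemap.xml
Sitemap: https://www.yourwebsite.com/sitemap-posts.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns every Sitemap URL in file order, or None if there are none.
print(parser.site_maps())
```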

The Crawl-Delay Directive

The crawl-delay directive tells crawlers how many seconds to wait between requests, which helps avoid server overload.

Google doesn’t support the crawl-delay directive and ignores it. Googlebot adjusts its crawl rate automatically. To reduce it temporarily, Google recommends returning 500, 503, or 429 HTTP status codes.

Bing and Yandex do support the crawl-delay directive.

For example, use the below rule to set a 10-second delay after each crawl action:

```
User-agent: *
Crawl-delay: 10
```

Further reading: 15 Crawlability Problems & How to Fix Them
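This sketch reads a crawl delay with Python's `urllib.robotparser` and works out what it implies. A 10-second wait allows at most 3,600 / 10 = 360 requests per hour from a crawler that honors it. The file contents are illustrative.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# crawl_delay() returns None when no Crawl-delay applies to the user-agent.
# This parser reports the value for any bot, including Googlebot, even though
# Google ignores the directive.
delay = parser.crawl_delay("Bingbot")
requests_per_hour = 3600 // delay if delay else None
print(delay, requests_per_hour)  # 10 360
```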

The Noindex Directive

A robots.txt file tells search engines what to crawl and what not to crawl but can’t reliably keep a URL out of search results—even if you use a noindex directive.

If you use noindex in robots.txt, the page can still appear in search results without visible content.

Google never officially supported the noindex directive in robots.txt, and it stopped honoring the rule entirely as of September 1, 2019.

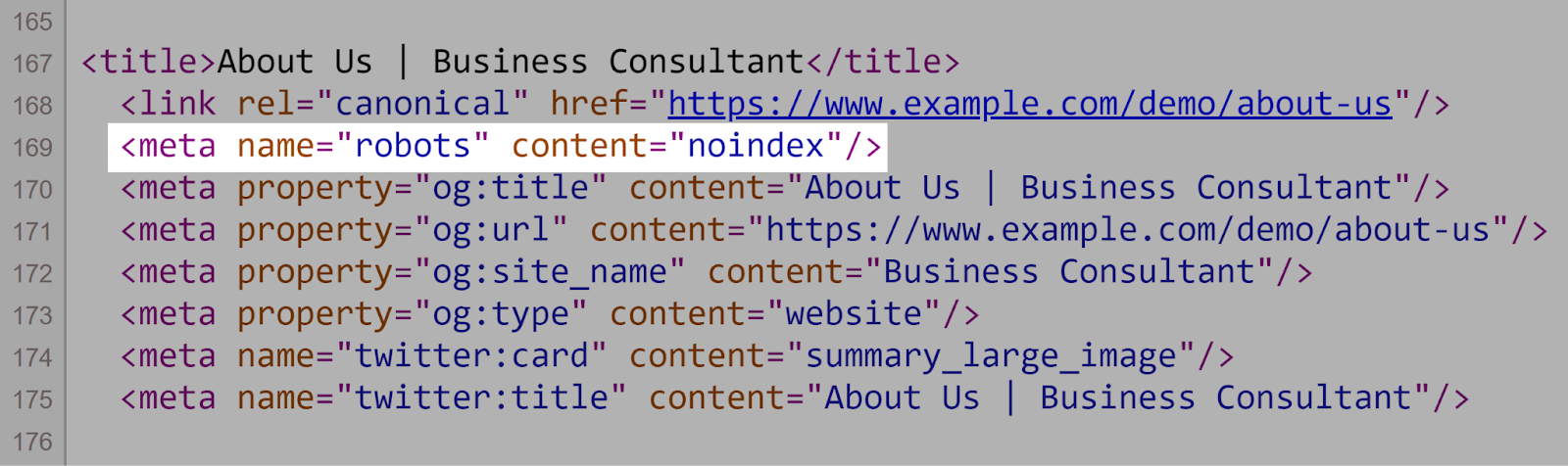

To reliably exclude a page from search results, use a meta robots noindex tag instead.

How to Create a Robots.txt File

Use a robots.txt generator tool to quickly create a robots.txt file.

Follow these steps to create a robots.txt file from scratch:

1. Create a File and Name It Robots.txt

Open a new plain-text (.txt) document in a text editor and save it with UTF-8 encoding.

Name the document “robots.txt.”

You can now start typing directives.

2. Add Directives to the Robots.txt File

A robots.txt file contains one or more groups of directives, and each group includes multiple lines of instructions.

Each group starts with a user-agent and specifies:

- Who the group applies to (the user-agent)

- Which directories (pages) or files the agent should access

- Which directories (pages) or files the agent shouldn’t access

- A sitemap (optional) to tell search engines which pages and files you deem important

Crawlers ignore lines that don’t match the above directives.
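If you generate the file with a script instead of typing it, the group structure above maps naturally onto code. This is a minimal sketch with a hypothetical `build_robots_txt` helper. It writes each group as a User-agent line followed by its rules, separates groups with a blank line, and puts Sitemap lines at the end. Because crawlers silently ignore unrecognized lines, it raises an error on unknown directives instead.

```python
from typing import List, Sequence, Tuple

Rule = Tuple[str, str]  # (directive, value), e.g. ("Disallow", "/clients/")

GROUP_DIRECTIVES = {"allow", "disallow", "crawl-delay"}


def build_robots_txt(groups: Sequence[Tuple[str, List[Rule]]],
                     sitemaps: Sequence[str] = ()) -> str:
    """Render (user-agent, rules) groups as robots.txt text."""
    blocks = []
    for user_agent, rules in groups:
        lines = [f"User-agent: {user_agent}"]  # every group opens with its user-agent
        for directive, value in rules:
            if directive.lower() not in GROUP_DIRECTIVES:
                # Crawlers would silently ignore an unknown line, so fail loudly here.
                raise ValueError(f"unsupported directive: {directive!r}")
            lines.append(f"{directive}: {value}")
        blocks.append("\n".join(lines))
    if sitemaps:
        # Sitemap lines don't belong to any group, so they go at the end.
        blocks.append("\n".join(f"Sitemap: {url}" for url in sitemaps))
    # A blank line between groups and a trailing newline at the end of the file.
    return "\n\n".join(blocks) + "\n"


text = build_robots_txt(
    [("Googlebot", [("Disallow", "/clients/")])],
    sitemaps=["https://www.yourwebsite.com/sitemap.xml"],
)
print(text)
# To save it: pathlib.Path("robots.txt").write_text(text, encoding="utf-8")
```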

Imagine you don’t want Google to crawl your “/clients/” directory because it’s for internal use only.

The first group in your file would look like this block:

```
User-agent: Googlebot
Disallow: /clients/
```

You can add more instructions for Google after that, like the one below:

```
User-agent: Googlebot
Disallow: /clients/
Disallow: /not-for-google
```

Then press enter twice to start a new group of directives.

Now imagine you want to prevent access to “/archive/” and “/support/” directories for all search engines.

Add a group for all user-agents (*) that blocks those directories. The file now looks like this:

```
User-agent: Googlebot
Disallow: /clients/
Disallow: /not-for-google

User-agent: *
Disallow: /archive/
Disallow: /support/
```

Once you’re finished, add your sitemap:

```
User-agent: Googlebot
Disallow: /clients/
Disallow: /not-for-google

User-agent: *
Disallow: /archive/
Disallow: /support/

Sitemap: https://www.yourwebsite.com/sitemap.xml
```

Save the file as “robots.txt.”
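Before uploading, you can sanity-check the finished file locally. This sketch feeds it to Python's `urllib.robotparser`. The test paths are hypothetical, and for simple prefix rules like these, the parser's answers should match a Google-style parser. The check also shows a consequence of group matching: Googlebot obeys only its own group, so it may still crawl “/archive/” and “/support/”. To keep Googlebot out of those directories too, repeat those lines in the Googlebot group.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /clients/
Disallow: /not-for-google

User-agent: *
Disallow: /archive/
Disallow: /support/

Sitemap: https://www.yourwebsite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "/clients/report.html"),  # blocked by the Googlebot group
    ("Googlebot", "/archive/2020/"),        # allowed: the * group doesn't apply
    ("Bingbot", "/archive/2020/"),          # blocked by the * group
    ("Bingbot", "/clients/report.html"),    # allowed: no * rule matches
]
for bot, path in checks:
    ok = parser.can_fetch(bot, "https://www.yourwebsite.com" + path)
    print(f"{bot:<10} {path:<22} {'allowed' if ok else 'blocked'}")
```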

3. Upload the Robots.txt File

After saving your robots.txt file, upload the file to your site so search engines can find it.

The process of uploading your robots.txt file depends on your hosting environment.

Search online or contact your hosting provider for details.

For example, search “upload robots.txt file to [your hosting provider]” for platform-specific instructions.

Below are some links explaining how to upload robots.txt files to popular platforms:

- Robots.txt in WordPress

- Robots.txt in Wix

- Robots.txt in Joomla

- Robots.txt in Shopify

- Robots.txt in BigCommerce

After uploading, confirm that the file is accessible and that Google can read it.

4. Test Your Robots.txt File

First, verify that anyone can view your robots.txt file by opening a private browser window and entering your robots.txt URL.

For example, “https://semrush.com/robots.txt.”

If you see your robots.txt content, test the markup.

Google provides two testing options:

- The robots.txt report in Search Console

- Google’s open-source robots.txt library (advanced)

Use the robots.txt report in Search Console if you are not an advanced user.

Open the robots.txt report.

If you haven’t linked your site to Search Console, add a property and verify site ownership first.

If you already have verified properties, select one from the drop-down after opening the robots.txt report.

The tool reports syntax warnings and errors.

Edit errors or warnings directly on the page and retest as you go.

Changes made within the robots.txt report aren’t saved to your site’s live robots.txt file, so copy and paste corrected code into your actual robots.txt file.

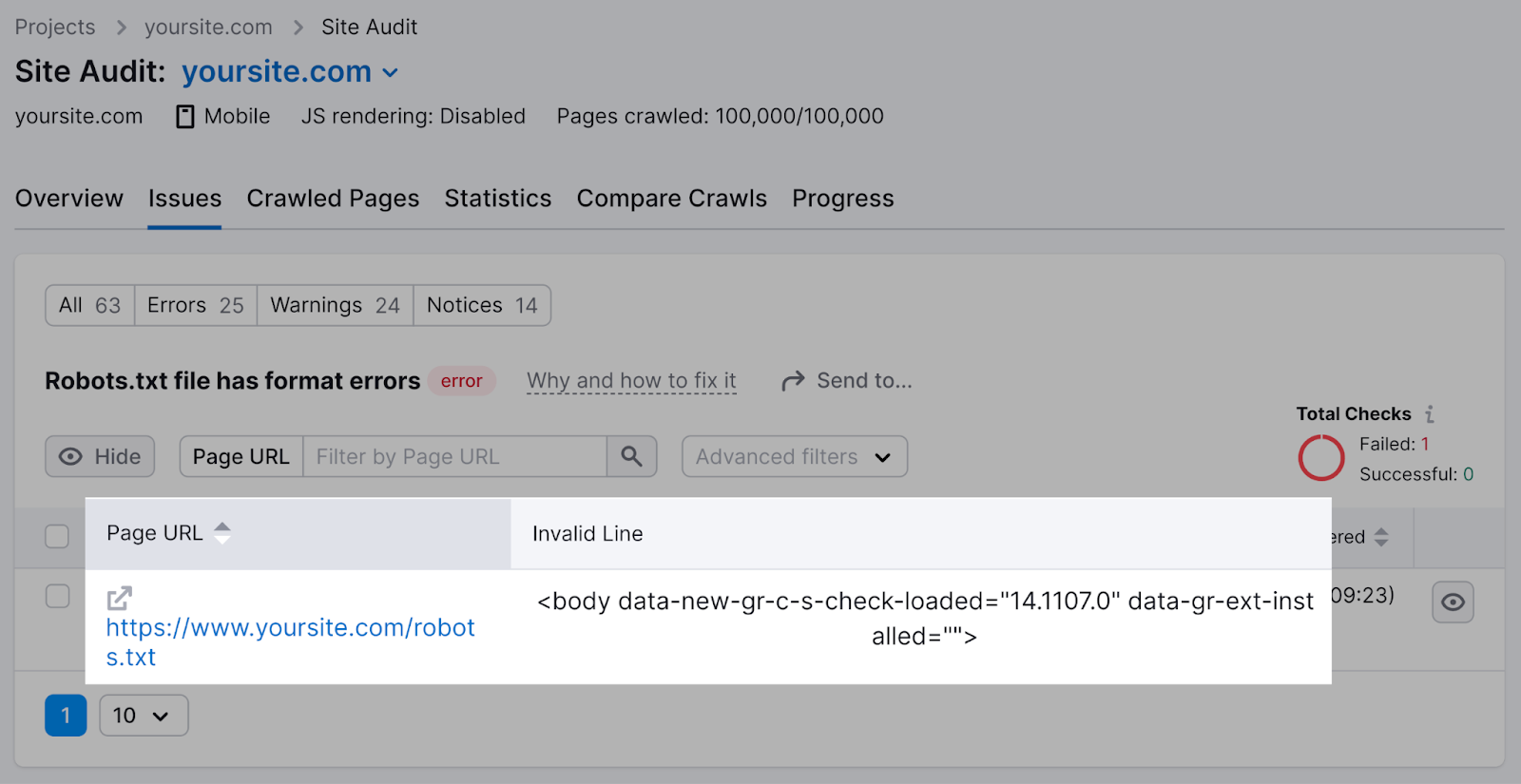

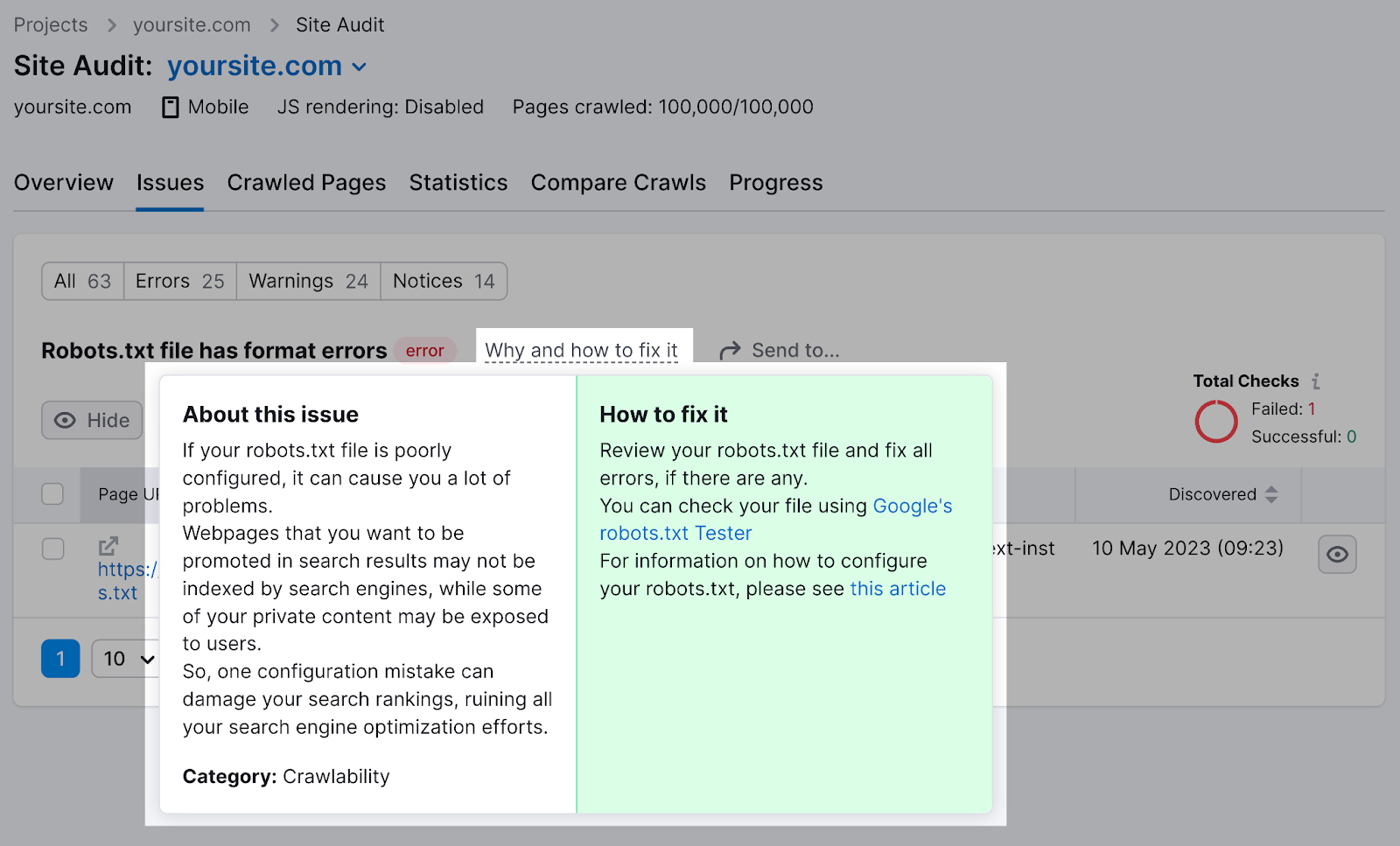

Semrush’s Site Audit tool can also check for robots.txt issues.

Set up a project and run an audit.

When the tool is ready, navigate to the “Issues” tab and search for “robots.txt.”

Click “Robots.txt file has format errors” if it appears.

View the list of invalid lines.

Click “Why and how to fix it” for specific instructions.

Check your robots.txt file regularly. Even small errors can affect your site’s indexability.
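A short script can also catch some format problems before you upload. This is a minimal sketch with a hypothetical `lint_robots_txt` function. It flags several directives on one line, unsupported fields such as “Noindex,” rules that appear before any User-agent line, absolute URLs in Allow or Disallow rules, and repeated user-agents. It doesn't replace the Search Console report, which reflects how Google actually parses the file.

```python
import re
from collections import Counter
from typing import List

# Fields this sketch treats as valid; crawlers ignore anything else.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}
LINE_RE = re.compile(r"^([A-Za-z-]+)\s*:\s*(.*)$")
# A second "field:" inside a value usually means two directives share a line.
EMBEDDED_RE = re.compile(r"\b(?:user-agent|allow|disallow|sitemap|crawl-delay)\s*:", re.IGNORECASE)


def lint_robots_txt(text: str) -> List[str]:
    """Return warnings for common robots.txt format mistakes."""
    warnings: List[str] = []
    agent_counts: Counter = Counter()
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        match = LINE_RE.match(line)
        if not match:
            warnings.append(f"line {number}: expected 'field: value'")
            continue
        field, value = match.group(1).lower(), match.group(2).strip()
        if field not in KNOWN_FIELDS:
            warnings.append(f"line {number}: unsupported field '{field}' will be ignored")
            continue
        if EMBEDDED_RE.search(value):
            warnings.append(f"line {number}: more than one directive on a line")
        if field == "user-agent":
            seen_user_agent = True
            agent_counts[value.lower()] += 1
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                warnings.append(f"line {number}: rule appears before any User-agent line")
            if value and not value.startswith(("/", "*")):
                warnings.append(f"line {number}: use a relative path starting with '/'")
    for agent, count in agent_counts.items():
        if count > 1:
            warnings.append(f"User-agent '{agent}' appears {count} times; merge its rules")
    return warnings


SAMPLE = """\
User-agent: * Disallow: /admin/
User-agent: Googlebot
Disallow: https://www.example.com/temp/
Noindex: /old-page/
User-agent: Googlebot
Disallow: /example-page-2
"""
for warning in lint_robots_txt(SAMPLE):
    print(warning)
```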

Robots.txt Best Practices

Use a New Line for Each Directive

Place each directive on its own line to ensure search engines can read them and follow the instructions.

Incorrect example:

```
User-agent: * Disallow: /admin/
Disallow: /directory/
```

Correct example:

```
User-agent: *
Disallow: /admin/
Disallow: /directory/
```

Use Each User-Agent Only Once

List each user-agent once to keep the file organized and reduce the risk of human error.

Confusing example:

```
User-agent: Googlebot
Disallow: /example-page

User-agent: Googlebot
Disallow: /example-page-2
```

Clear example:

```
User-agent: Googlebot
Disallow: /example-page
Disallow: /example-page-2
```

Writing all directives under the same user-agent is cleaner and helps you stay organized.

Use Wildcards to Clarify Directions

Use wildcards (*) to apply directives broadly.

To prevent search engines from accessing URLs with parameters, you could technically list them out one by one.

However, you can simplify your directions with a wildcard.

Inefficient example:

```
User-agent: *
Disallow: /shoes/vans?
Disallow: /shoes/nike?
Disallow: /shoes/adidas?
```

Efficient example:

```
User-agent: *
Disallow: /shoes/*?
```

The above example blocks all search engine bots from crawling any URL under the “/shoes/” subfolder that contains a question mark (that is, URLs with parameters).

Use ‘$’ to Indicate the End of a URL

Use “$” to indicate the end of a URL.

To block search engines from crawling all of a certain file type, using “$” helps you avoid listing all the files individually.

Inefficient:

```
User-agent: *
Disallow: /photo-a.jpg
Disallow: /photo-b.jpg
Disallow: /photo-c.jpg
```

Efficient:

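```
User-agent: *
Disallow: /*.jpg$
```

Use “$” carefully because mistakes can lead to accidental unblocking. For example, “/*.jpg$” doesn’t match “/photo-a.jpg?size=large” because that URL doesn’t end in “.jpg.”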
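The `*` and `$` behavior can be illustrated with a small matcher. This sketch follows the matching rules Google documents and RFC 9309 standardizes. Patterns match from the start of the path, `*` matches any characters, and a trailing `$` anchors the end. The longest matching rule wins, and Allow wins a tie. The function names are hypothetical, and the sketch ignores details such as percent-encoding. Python's built-in `urllib.robotparser` uses plain prefix matching and doesn't interpret these wildcards, so it isn't used here.

```python
import re
from typing import List, Tuple

Rule = Tuple[str, str]  # ("allow" | "disallow", path pattern)


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a regular expression."""
    anchored = pattern.endswith("$")  # trailing $ = must end here
    if anchored:
        pattern = pattern[:-1]
    # '*' becomes '.*'; every other character matches literally.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path: str, rules: List[Rule]) -> bool:
    """Decide whether `path` (path plus any query string) may be crawled.

    The longest matching pattern wins; on a tie, allow beats disallow.
    If no rule matches, crawling is allowed.
    """
    best_length, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:  # an empty Disallow blocks nothing
            continue
        if pattern_to_regex(pattern).match(path):  # match() anchors at the start
            length = len(pattern)
            is_allow = directive.lower() == "allow"
            if length > best_length or (length == best_length and is_allow):
                best_length, allowed = length, is_allow
    return allowed


SHOE_RULES = [("disallow", "/shoes/*?")]
JPG_RULES = [("disallow", "/*.jpg$")]
BLOG_RULES = [("disallow", "/blog"), ("allow", "/blog/example-post")]

print(is_allowed("/shoes/vans?color=black", SHOE_RULES))  # False
print(is_allowed("/photo-a.jpg", JPG_RULES))              # False
print(is_allowed("/photo-a.jpg?size=large", JPG_RULES))   # True ($ not satisfied)
print(is_allowed("/blog/example-post", BLOG_RULES))       # True (longer Allow wins)
```

The last call shows why the earlier “/blog” example works. The longer Allow rule outranks the shorter Disallow rule.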

Use the Hash Symbol to Add Comments

Add comments with “#”. Crawlers ignore everything from the hash symbol to the end of the line.

For example:

```
User-agent: *
#Landing Pages
Disallow: /landing/
Disallow: /lp/
#Files
Disallow: /files/
Disallow: /private-files/
#Websites
Allow: /website/*
Disallow: /website/search/*
```

Developers sometimes add humorous comments using hashes since most users never see the file.

For example, YouTube’s robots.txt file reads: “Created in the distant future (the year 2000) after the robotic uprising of the mid 90’s which wiped out all humans.”

And Nike’s robots.txt reads “just crawl it” (a nod to its “just do it” tagline) and features the brand’s logo.

Use Separate Robots.txt Files for Different Subdomains

Robots.txt files only control crawling on the subdomain where they reside, which means you may need multiple files.

If your site is “domain.com” and your blog is “blog.domain.com,” create a robots.txt file for both the domain’s root directory and the blog’s root directory.

5 Robots.txt Mistakes to Avoid

When creating your robots.txt file, watch out for the following common mistakes:

1. Not Including Robots.txt in the Root Directory

Your robots.txt file must be located in your site’s root directory to ensure search engine crawlers can find it easily.

For example, if your website’s homepage is “www.example.com,” place the file at “www.example.com/robots.txt.”

If you put it in a subdirectory, like “www.example.com/contact/robots.txt,” search engines won’t look for it there and will assume you haven’t set any crawling instructions.

2. Using Noindex Instructions in Robots.txt

Don’t use noindex instructions in robots.txt—Google doesn’t support the noindex rule in the robots.txt file.

Instead, use meta robots tags (e.g., <meta name="robots" content="noindex">) on individual pages to control indexing.
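To confirm a page carries the noindex instruction, you can scan its HTML for the robots meta tag. This minimal sketch uses Python's `html.parser`. `RobotsMetaFinder` and `has_noindex` are hypothetical names, and the sketch checks only `name="robots"` tags, not crawler-specific ones such as `name="googlebot"`. Keep in mind that a crawler can only see this tag if robots.txt doesn't block the page.

```python
from html.parser import HTMLParser
from typing import List, Optional, Set, Tuple


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag != "meta":
            return
        # html.parser lowercases tag and attribute names; values keep their case.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").strip().lower() == "robots":
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page's robots meta tag includes noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return "noindex" in finder.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(has_noindex(page))  # True
```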

3. Blocking JavaScript and CSS

Avoid blocking access to JavaScript and CSS files via robots.txt unless necessary (e.g., restricting access to sensitive data).

Blocking crawling of JavaScript and CSS files makes it difficult for search engines to understand your site’s structure and content, which can harm your rankings.

Further reading: JavaScript SEO: How to Optimize JS for Search Engines

4. Not Blocking Access to Your Unfinished Site or Pages

Block search engines from crawling unfinished versions of your site to keep it from being found before you’re ready (also use a meta robots noindex tag for each unfinished page).

Search engines crawling and indexing an in-development page may lead to a poor user experience and potential duplicate content issues.

Use robots.txt to keep unfinished content from being crawled until you’re ready to launch.

5. Using Absolute URLs

Use relative URLs in your robots.txt file to make it easier to manage and maintain.

Absolute URLs are unnecessary and can cause errors if your domain changes.

❌ Example with absolute URLs (not recommended):

```
User-agent: *
Disallow: https://www.example.com/private-directory/
Disallow: https://www.example.com/temp/
Allow: https://www.example.com/important-directory/
```

✅ Example with relative URLs (recommended):

```
User-agent: *
Disallow: /private-directory/
Disallow: /temp/
Allow: /important-directory/
```

Keep Your Robots.txt File Error-Free

Now that you understand how robots.txt files work, you should ensure yours is optimized. Even small mistakes can affect how your site is crawled, indexed, and displayed in search results.

Semrush’s Site Audit tool makes analyzing your robots.txt file for errors easy and provides actionable recommendations to fix any issues.