Why do working pages on my website appear as broken?

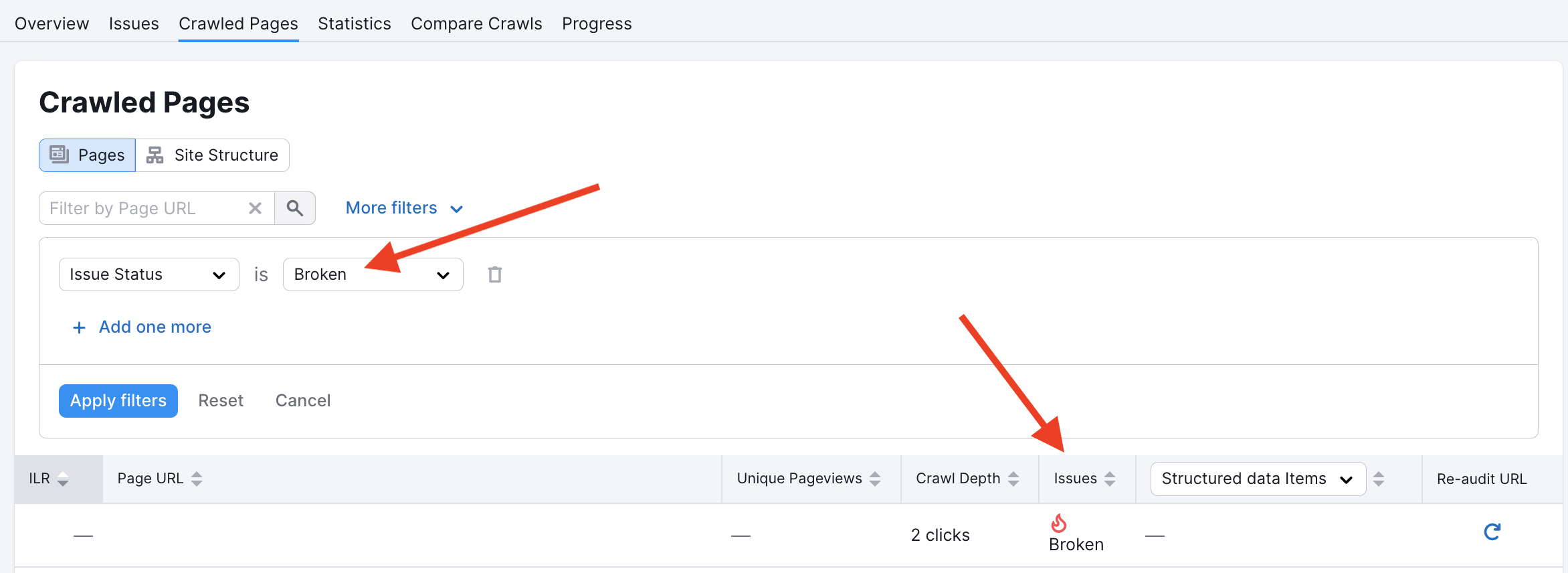

Site Audit may sometimes report some of your internal pages as broken even though they are fully up and running. While this does not happen often, it can be confusing.

Generally, when this happens it's because of a false positive. The four most common reasons for a false-positive reading are:

- Our Site Audit crawler could have been blocked from some pages by robots.txt or by noindex tags

- Your hosting provider might have blocked Semrush bots because it mistook the crawl for a DDoS attack (a massive number of hits in a short period of time)

- At the moment of the campaign re-crawl, the domain could not be resolved by DNS

- The website's server cache could be storing old data and serving it to crawler bots
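To rule out the DNS cause yourself, you can check whether your domain currently resolves. The snippet below is a minimal sketch using Python's standard `socket` module. The helper name `can_resolve` and the `resolver` parameter are illustrative and not part of any Semrush tool. A successful lookup now does not prove the domain resolved at the moment of the re-crawl.

```python
import socket
from typing import Callable


def can_resolve(hostname: str, resolver: Callable = socket.getaddrinfo) -> bool:
    """Return True if the hostname resolves to at least one address.

    `resolver` defaults to socket.getaddrinfo; it is a parameter only so the
    check can be exercised without network access (hypothetical helper).
    """
    try:
        return len(resolver(hostname, 443)) > 0
    except socket.gaierror:
        # Raised when the name cannot be resolved by DNS.
        return False


if __name__ == "__main__":
    # Replace example.com with your own domain.
    print(can_resolve("example.com"))
```

If this returns `False` for your domain, the pages reported as broken were likely unreachable because of a DNS problem rather than a problem with the pages themselves.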

If you believe it’s happening because the crawler is blocked, you can learn how to troubleshoot your robots.txt in this article.
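As a quick local check, you can test whether a given URL is disallowed for the crawler by your robots.txt rules. This is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content and URLs are hypothetical, and the user-agent name `SiteAuditBot` is an assumption, so confirm the exact name in the Semrush documentation. Python's parser may also interpret edge cases differently from the actual crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; paste in your site's actual file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: SiteAuditBot
Disallow: /checkout/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # "SiteAuditBot" is assumed to be the Site Audit crawler's user agent.
    for url in ("https://example.com/checkout/cart", "https://example.com/blog/"):
        print(url, is_allowed(ROBOTS_TXT, "SiteAuditBot", url))
```

A URL that is disallowed here is a likely candidate for a false-positive "broken" report, since the crawler is not permitted to fetch it.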

You can also lower the crawl speed to avoid a large number of hits on your pages at one time. In each of these cases, the page loads correctly for you, but our bot was unable to access it and therefore reported a false-positive result.

To solve the problem with the server cache, try clearing it and then re-running Site Audit. This will bring you updated results that include all your fixes.
